
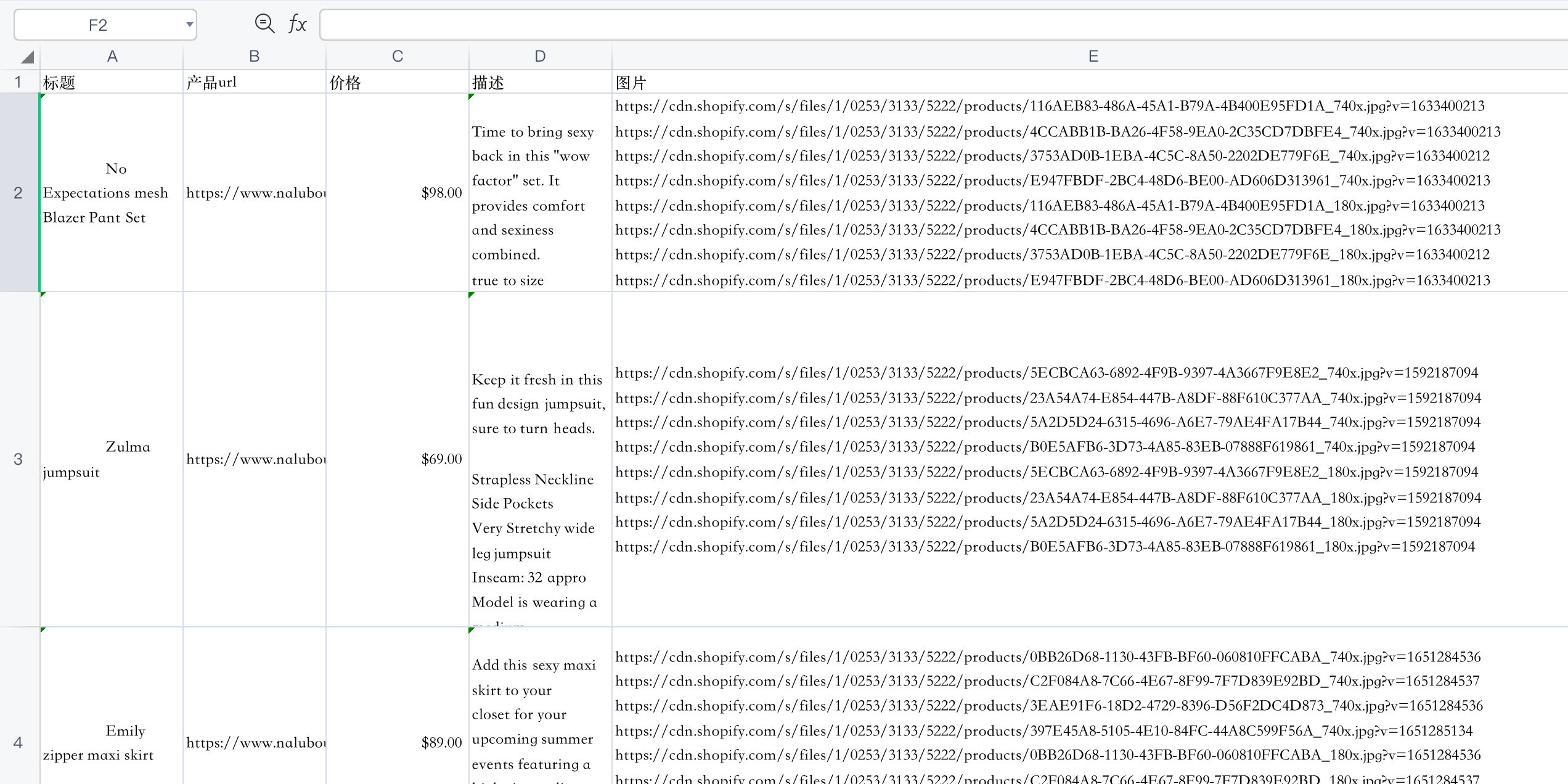

```python
import csv
import os
import re
import time
from urllib.parse import urljoin

import bs4
import requests

url_pro = 'https://www.naluboutique.com/collections/all'

# CSS selectors for the store's theme; update them if the markup changes
product_link_select = '.grid-product__content .grid-product__link'
product_title_select = '.product-single__title'
product_price_select = '.product__price'
product_desc_select = '.product-single__description'
product_img_select = '.product__photos img'


def fetch(url, retry_delay=3):
    """GET url, retrying every retry_delay seconds until the request succeeds."""
    while True:
        try:
            res = requests.get(url, timeout=30)
            res.encoding = res.apparent_encoding
            print('Requested', url, 'status', res.status_code)
            res.raise_for_status()
            return res
        except requests.RequestException as e:
            print('Request failed:', e)
            print('Retrying in', retry_delay, 'seconds')
            time.sleep(retry_delay)


# --- Crawl one collection page ---
def crawl_collections(url):
    """Scrape every product linked from a collection page.

    Returns True if the page has a "next page" link.
    """
    res = fetch(url)
    print('Request succeeded, collecting product links')
    soup = bs4.BeautifulSoup(res.text, 'html.parser')
    for link in soup.select(product_link_select):
        # hrefs are site-relative (/products/...), so resolve them against the page URL
        product_url = urljoin(url, link.get('href'))
        print('Got product URL')
        crawl_product(product_url)
        print('\n')
    return bool(soup.select('.next'))


# --- Scrape one product page ---
def crawl_product(url):
    print('Requesting product page', url)
    res = fetch(url)
    soup = bs4.BeautifulSoup(res.text, 'html.parser')

    name = soup.select(product_title_select)[0].get_text()
    # Drop spaces and line breaks from the price text
    price = re.sub(r'[ \n]', '', soup.select(product_price_select)[0].get_text())
    des = soup.select(product_desc_select)[0].get_text()

    images = []
    for img in soup.select(product_img_select):
        # Lazy-loaded images carry their URL in data-src instead of src
        imgurl = img.get('src') or img.get('data-src')
        # Skip missing URLs and unresolved templates containing {width}
        if not imgurl or '{width}' in imgurl:
            continue
        # Resolves protocol-relative //cdn... URLs against the https page URL
        images.append(urljoin(url, imgurl))
    images = '\r\n'.join(images)

    file_header = ['Title', 'Product URL', 'Price', 'Description', 'Images']
    row = [name, url, price, des, images]

    while True:
        try:
            # newline='' keeps the csv module from writing blank lines on Windows
            with open(csv_name, 'a', encoding='utf-8', newline='') as csv_file:
                writer = csv.writer(csv_file)
                # Write the header only once, while the file is still empty
                if os.path.getsize(csv_name) == 0:
                    writer.writerow(file_header)
                writer.writerow(row)
            break
        except OSError as e:
            print(e)
            print(csv_name + ' could not be written, retrying...')
            time.sleep(5)
    print('Product scraped!')


if __name__ == '__main__':
    domain = re.match(r'https://(.*)/collections', url_pro).group(1)
    # One output file per run, named after the domain and the start time
    csv_name = domain + time.strftime('_%Y-%m-%d-%H-%M-%S') + '.csv'

    n = 1
    has_next = True
    while has_next:
        url = url_pro + '?sort_by=best-selling&page=' + str(n)
        has_next = crawl_collections(url)
        print('Scraped page', n)
        n += 1
    print('All pages scraped!')
```
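The `while True` retry loops in the scraper never give up, so a permanently broken URL stalls the whole run. Below is a minimal sketch of a bounded alternative, assuming you would rather skip a page after a few failures than wait forever. `retry_with_backoff` is a hypothetical helper (not part of `requests`): it calls any zero-argument function, doubles the wait after each failure, and re-raises the last error once the attempts run out.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar('T')


def retry_with_backoff(
    func: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func until it succeeds or `attempts` calls have failed.

    The wait doubles after every failure: base_delay, 2*base_delay, 4*base_delay, ...
    The last exception is re-raised when every attempt has failed.
    """
    if attempts < 1:
        raise ValueError('attempts must be at least 1')
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            delay = base_delay * (2 ** attempt)
            print(f'Attempt {attempt + 1} failed, retrying in {delay:.1f}s')
            sleep(delay)
    raise AssertionError('unreachable')


if __name__ == '__main__':
    # Simulate a request that fails twice before succeeding; no network is used.
    calls = {'n': 0}

    def flaky():
        calls['n'] += 1
        if calls['n'] < 3:
            raise ConnectionError('simulated failure')
        return 'ok'

    waits = []
    # Passing waits.append as `sleep` records the delays instead of sleeping
    result = retry_with_backoff(flaky, base_delay=0.5, sleep=waits.append)
    print(result, waits)
```

In the scraper, the function passed in would wrap the `requests.get(url, timeout=30)` call plus `raise_for_status()`, with `retry_on=(requests.RequestException,)` so that programming errors such as a bad selector are not retried.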
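The image loop skips every URL that still contains a `{width}` placeholder, which can drop lazy-loaded photos entirely. This sketch offers an alternative under one assumption: the placeholder is meant to be filled with a pixel width, as responsive lazy-loading scripts commonly do. The `1024` default is an arbitrary choice and is not documented by the store. The hypothetical helpers `clean_price` and `normalize_image_urls` use only the standard library, with `urllib.parse.urljoin` resolving protocol-relative `//...` links, site-relative paths, and absolute URLs alike.

```python
from typing import Iterable, List, Optional
from urllib.parse import urljoin


def clean_price(text: str) -> str:
    """Remove all whitespace from scraped price text, like the scraper's re.sub calls."""
    return ''.join(text.split())


def normalize_image_urls(
    page_url: str,
    raw_urls: Iterable[Optional[str]],
    width: int = 1024,
) -> List[str]:
    """Turn src/data-src values into absolute, de-duplicated image URLs.

    Assumption: a '{width}' placeholder can be replaced with a pixel width
    instead of the image being skipped.
    """
    seen = set()
    result = []
    for raw in raw_urls:
        if not raw:
            continue  # the <img> had neither src nor data-src
        url = urljoin(page_url, raw.replace('{width}', str(width)))
        if url not in seen:  # keep the first occurrence, preserve order
            seen.add(url)
            result.append(url)
    return result


if __name__ == '__main__':
    page = 'https://shop.example/products/shirt'  # hypothetical product URL
    print(clean_price('\n   $25.00  \n'))
    print(normalize_image_urls(page, [
        '//cdn.example.com/a_{width}x.jpg',
        None,
        '//cdn.example.com/a_{width}x.jpg',
        '/files/b.jpg',
    ]))
```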
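Each product is appended to the CSV as one row, and the header row is written only when the file is still empty. Here is that pattern as a standalone stdlib function; the name `append_row` is hypothetical. The file is opened with `newline=''`, as the `csv` module documentation recommends, so quoted cells containing line breaks (such as the list of image URLs) are written and read back intact.

```python
import csv
import os
from typing import Sequence


def append_row(path: str, header: Sequence[str], row: Sequence[str]) -> None:
    """Append one row to a CSV file, writing the header first if the file is new or empty."""
    with open(path, 'a', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        # Opening in 'a' mode creates the file, so getsize() is safe here;
        # nothing has been written yet, so 0 means "new or empty file".
        if os.path.getsize(path) == 0:
            writer.writerow(header)
        writer.writerow(row)


if __name__ == '__main__':
    import tempfile

    header = ['Title', 'Product URL', 'Price', 'Description', 'Images']
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, 'products.csv')
        # Hypothetical rows; the image cell holds several URLs separated by line breaks
        append_row(path, header, ['Shirt', 'https://shop.example/products/shirt', '$25.00',
                                  'Soft cotton', 'https://a.example/1.jpg\nhttps://a.example/2.jpg'])
        append_row(path, header, ['Hat', 'https://shop.example/products/hat', '$15.00', 'Warm', ''])
        with open(path, encoding='utf-8', newline='') as f:
            for r in csv.reader(f):
                print(r)
```

Because the header check reads the file size on disk, two processes appending to the same file at once could both write a header. That does not happen in the single-process scraper.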